Author: Shitanshu Bhushan

Speeding Up Llama: A Hybrid Approach to Attention Mechanisms

12 min read

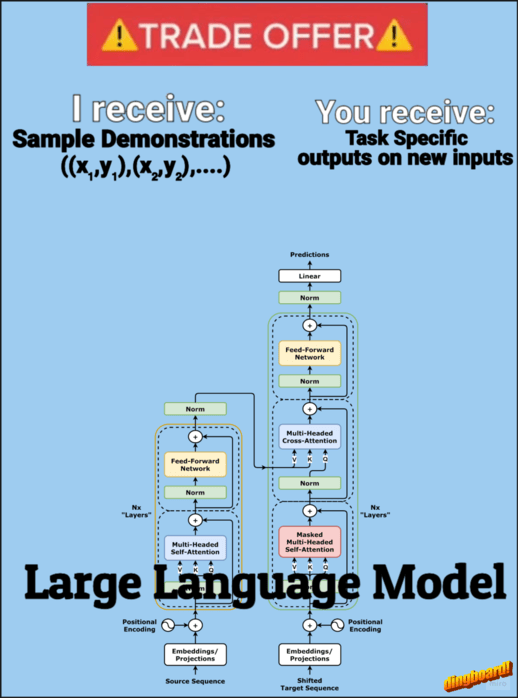

From attention to gradient descent: unraveling how transformers learn from examples

6 min read

Breaking the Quadratic Barrier: Modern Alternatives to Softmax Attention

8 min read